The highlights of Meta's open - source large language model Llama 4 are as follows:

The highlights of Meta's open - source large language model Llama 4 are as follows:

- Innovative Architecture

- It introduces the Mixture - of - Experts (MoE) architecture for the first time. In the MoE model, only a part of the total parameters is activated for a single token. Meta uses alternating dense layers and MoE layers. In the MoE layer, 128 routing experts and a shared expert are used, and each token is sent to the shared expert and one of the 128 routing experts. This architecture improves computational efficiency during training and inference, and provides higher quality than dense models under a fixed training FLOPs budget. It also improves inference efficiency by reducing model service costs and latency.

- Powerful Multimodality

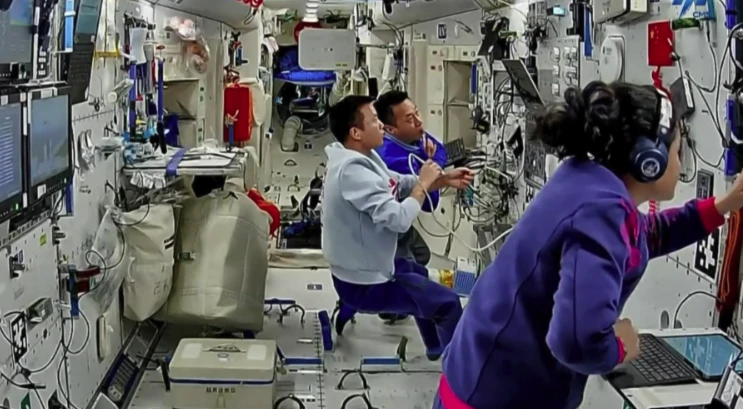

- It is a native multimodal model that uses the early - fusion technique to seamlessly integrate text and visual tokens into a unified model backbone. This allows the model to be jointly pre - trained with a large amount of unlabeled text, image, and video data. It can process and integrate various types of data such as text, video, images, and audio, and convert content between these formats. The vision encoder uses an upgraded version of MetaCLIP, which is trained simultaneously with a frozen - parameter Llama model to better adapt to the LLM.

- Diverse Versions with Distinct Advantages

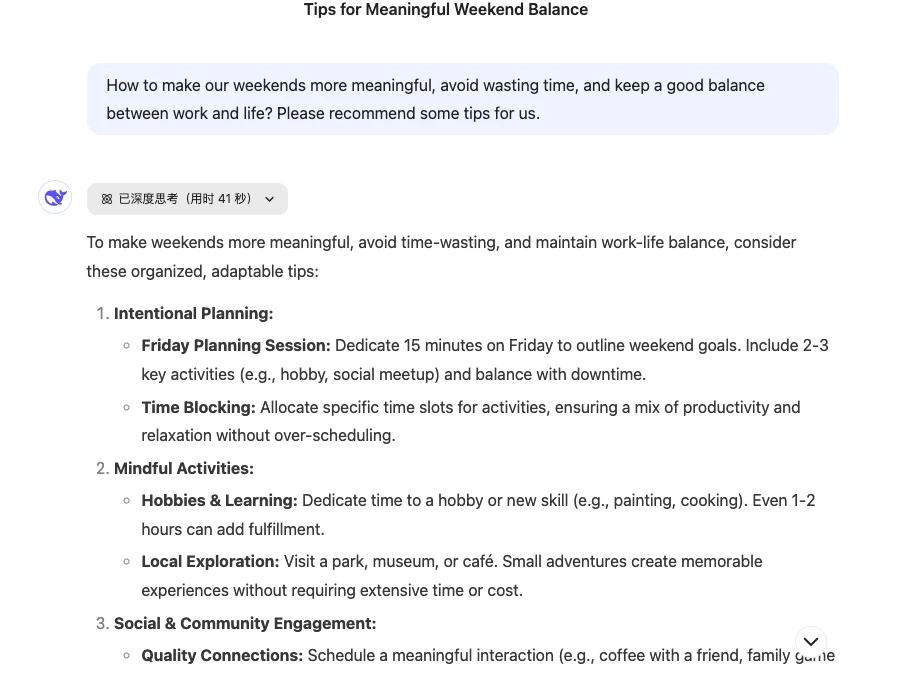

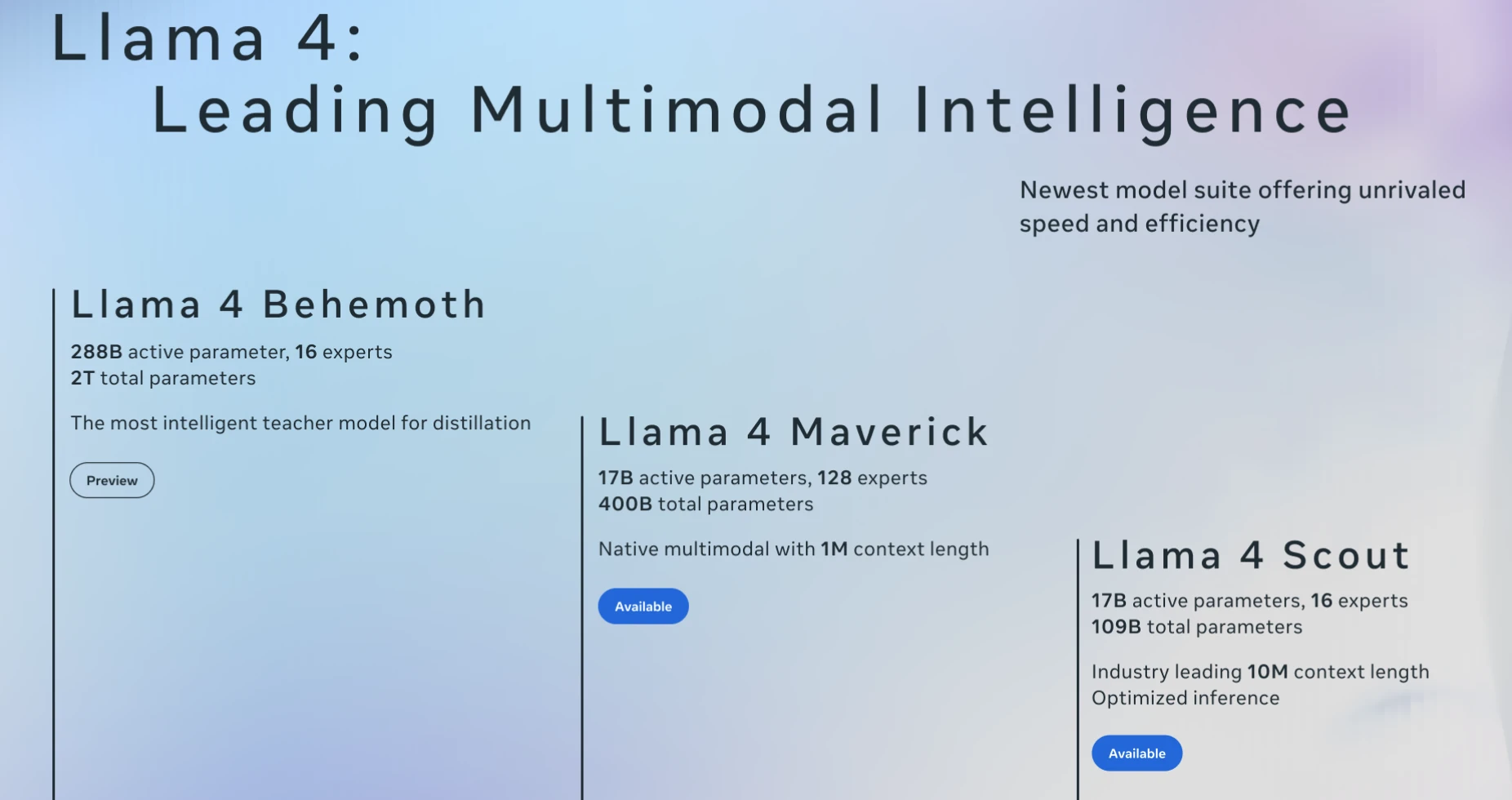

- Llama 4 Scout: It is known as the "best - in - class global multimodal model". It has 16 experts, 1.7 billion active parameters, and 109 billion total parameters, and can run on a single NVIDIA H100 GPU (using Int4 quantization). It supports a context window of up to 10 million tokens and can handle up to 5 million words of text. In many benchmark tests, its performance exceeds that of well - known models such as Gemma 3, Gemini 2.0 Flash - Lite, and Mistral 3.1, and it performs particularly well in the field of image localization. It is suitable for scenarios such as document summarization and large - code - library reasoning.

- Llama 4 Maverick: It has 128 experts, 1.7 billion active parameters, and 400 billion total parameters, and is adapted to a single H100 host. It defeats GPT - 4o and Gemini 2.0 Flash in various benchmark tests, and achieves results equivalent to the new DeepSeek - v3 in terms of reasoning and programming, with only half the active parameters of DeepSeek - v3. Its experimental chat version has an ELO score of 1417 on LMArena, and the inference cost per 1M tokens of input and output is in the range of $0.19 - 0.49, which is close to or even lower than that of DeepSeek v3.1 ($0.48). It is very suitable for general - purpose assistant and chat - based application scenarios.

- Llama 4 Behemoth: It has 16 experts, 288 billion active parameters, and nearly 2 trillion total parameters. Its performance in multiple STEM benchmark tests is better than that of GPT - 4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro. It will serve as a teacher model for the collaborative distillation of models such as Maverick. It is pre - trained with 30T multimodal tokens on 32000 GPUs using FP8 precision. After its official release in the future, it will provide strong support for complex task processing and cutting - edge research.

- High Cost - Effectiveness

- The efficient characteristics of Llama 4 significantly reduce the inference cost. For example, the experimental chat version of Llama 4 Maverick has an inference cost per 1M tokens of input and output in the range of $0.19 - 0.49, which is close to or even lower than that of DeepSeek v3.1 ($0.48). Llama 4 Scout and Maverick can process each million input tokens at a cost of 15 cents and 24 cents respectively, which is significantly lower than the cost of models such as GPT - 4 and Claude 3.7 Sonnet.

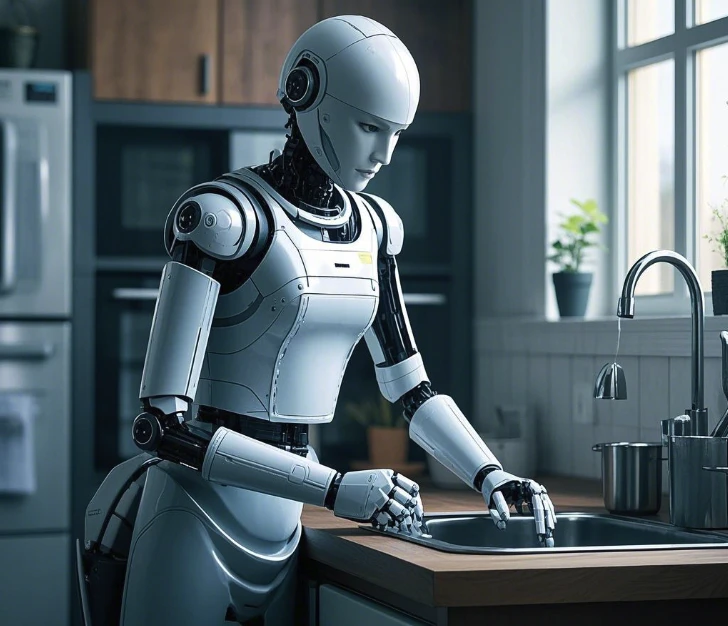

- High Ecological Integration

- Llama 4 can be downloaded from llama.com and Hugging Face, and will soon be available on mainstream cloud and data platforms, edge chips, and global service integrators. In addition, it has been integrated into applications such as WhatsApp, Messenger, Instagram Direct, and the Meta.AI website. Users can try out Meta AI based on Llama 4 on these platforms, which provides convenience for developers and researchers to experiment and integrate, and strongly promotes the innovative application of AI technology in various fields.